How Does InfiniBand Cabling Achieve Low Latency And High Bandwidth At Scale?

InfiniBand cabling is engineered to support fast, predictable data movement across large-scale computing and storage fabrics. It is commonly deployed in environments where application performance depends on consistent latency, high message rates, and sustained throughput across many nodes. By combining purpose-built protocols with carefully designed copper and optical cabling, InfiniBand delivers scalable performance that general-purpose networking technologies struggle to match.

Protocol Design And Cabling Relationship

InfiniBand was designed as a system-level interconnect rather than a general-purpose local area network. Its transport model minimizes software overhead and enables direct memory access between endpoints. Cabling plays a critical role in this design by supporting clean, deterministic signal paths that allow the protocol to operate close to line rate without excessive buffering or retransmission.

Multi Lane Link Architecture

InfiniBand links operate using multiple parallel lanes, commonly in 1X, 4X, or 12X widths. Each lane carries data simultaneously, so aggregate bandwidth grows with link width while per-lane signaling stays manageable. InfiniBand cables are built with tightly matched differential pairs to ensure consistent timing across lanes, which is essential for maintaining synchronization and avoiding latency penalties as link widths increase.

Signal Integrity And Electrical Control

Low-latency operation depends on stable signal quality. InfiniBand copper cables use controlled-impedance conductors, shielding, and precise pair geometry to minimize crosstalk and attenuation over short distances. Optical InfiniBand cables extend this stability over longer runs because optical fiber is immune to electromagnetic interference along the run. In both cases, maintaining low insertion loss and tight skew tolerance keeps the bit error rate low, so switches and adapters can forward traffic without the retransmissions and extra error-correction delay that a marginal channel would incur.

RDMA And Reduced CPU Involvement

A major contributor to InfiniBand’s low latency is its native support for Remote Direct Memory Access. RDMA allows data to move directly between application memory spaces without heavy CPU involvement. Cabling reliability is critical here, since link errors force retransmissions that erode the latency benefit of RDMA. High-quality InfiniBand cables help maintain error-free operation, preserving both latency and throughput at scale.
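To see why link error rates matter at these speeds, the sketch below estimates the average time between bit errors on a single link. The bit error ratios in the example are illustrative values chosen for this calculation, not figures taken from a cable datasheet, and the function name is hypothetical.

```python
def seconds_between_bit_errors(line_rate_gbps: float, bit_error_ratio: float) -> float:
    """Average time in seconds between bit errors on one link.

    Assumes errors are independent and occur at a constant ratio,
    which is a simplification of real channel behavior.
    """
    if line_rate_gbps <= 0 or bit_error_ratio <= 0:
        raise ValueError("line rate and bit error ratio must be positive")
    errors_per_second = line_rate_gbps * 1e9 * bit_error_ratio
    return 1.0 / errors_per_second


# Illustrative comparison on a 100 Gb/s link.
for ber in (1e-12, 1e-15):
    interval = seconds_between_bit_errors(100, ber)
    print(f"BER {ber:g}: about one bit error every {interval:,.0f} s")
```

Under these assumptions, a 100 Gb/s link at a bit error ratio of 1e-12 sees roughly one bit error every ten seconds, while the same link at 1e-15 sees one only every few hours. Multiplied across thousands of links, that difference determines how often the fabric has to recover from corrupted packets.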

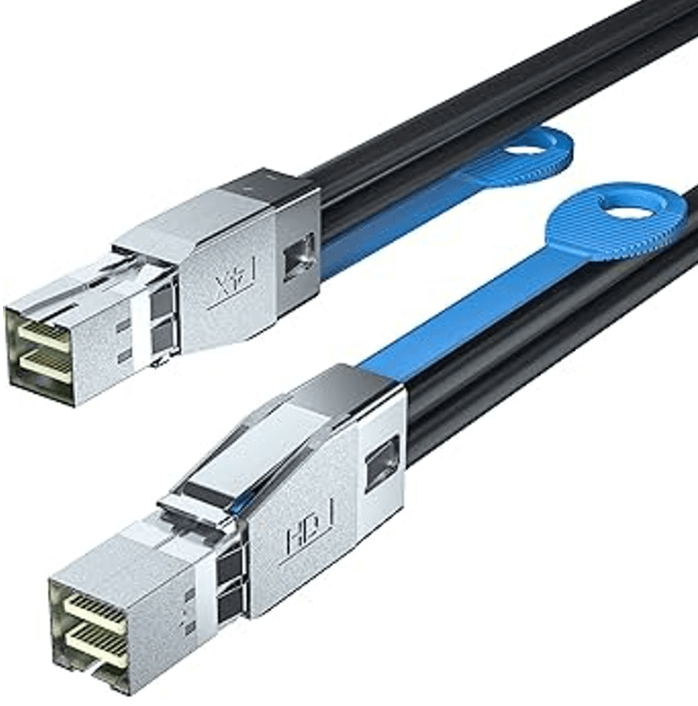

Copper And Optical Cabling Roles

Copper InfiniBand cables are typically used for short-reach connections within or between adjacent racks. They offer low power consumption and avoid electrical-to-optical conversion. Optical InfiniBand cables are selected for longer distances or large fabrics where signal integrity must be preserved across extended runs. Both cable types are designed to meet the strict performance margins required for scalable InfiniBand deployments.

Scaling Across Large Fabrics

As InfiniBand fabrics grow to hundreds or thousands of nodes, consistent cabling performance becomes increasingly important. Variations in signal quality can introduce uneven latency or throughput bottlenecks. Standardized InfiniBand cable designs and defined length limits help ensure uniform behavior across the fabric, enabling predictable performance even as the network scales.
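A rough latency budget for one path through the fabric can be sketched as the sum of switch forwarding delays and cable propagation delays. The figures below are placeholders for illustration: the 5 ns per meter propagation delay is a common rule of thumb for both copper and fiber, and the 100 ns switch latency is an assumed value, not a measurement of any particular product.

```python
def path_latency_ns(cable_lengths_m: list[float],
                    switch_latency_ns: float,
                    ns_per_meter: float = 5.0) -> float:
    """Estimate one-way latency for a path of cables joined by switches.

    cable_lengths_m lists each cable segment in order, so a path with
    N cables passes through N - 1 switches. Adapter and software
    latency are deliberately left out of this sketch.
    """
    if not cable_lengths_m:
        raise ValueError("path needs at least one cable segment")
    switch_hops = len(cable_lengths_m) - 1
    propagation = sum(cable_lengths_m) * ns_per_meter
    return switch_hops * switch_latency_ns + propagation


# Hypothetical path: 2 m copper, 30 m optical, 2 m copper.
total = path_latency_ns([2.0, 30.0, 2.0], switch_latency_ns=100.0)
print(f"estimated one-way path latency: {total:.0f} ns")
```

With these assumed numbers, the cables contribute 170 ns and the two switches 200 ns. The point of the sketch is that both terms are fixed by the design, which is why defined length limits help keep latency uniform across the fabric.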

Common High Scale Use Cases

InfiniBand cabling is widely used in:

- High performance computing clusters
- AI and machine learning training systems
- GPU interconnect fabrics
- NVMe over Fabrics storage networks

These workloads depend on fast collective operations and synchronized data movement, which benefit directly from low latency, high bandwidth cabling.

Installation And Design Considerations

Proper cable selection, routing discipline, and connector handling are essential. Tight bends, excessive tension, or contamination can degrade signal quality and impact latency. Fabric designers should verify supported link widths, data rates, and cable lengths to ensure reliable operation across all nodes.
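One way to check that a link came up at its intended width and rate is to inspect what the host reports after installation. The sketch below assumes a Linux host whose InfiniBand driver exposes a rate string such as `100 Gb/sec (4X EDR)` in a file like `/sys/class/infiniband/<device>/ports/<port>/rate`; the exact path, device name, and string format should be confirmed on the target system. The function names are hypothetical.

```python
import re
from typing import Optional

# Matches strings such as "100 Gb/sec (4X EDR)" or "10 Gb/sec (4X)".
_RATE_PATTERN = re.compile(
    r"^\s*(\d+(?:\.\d+)?)\s+Gb/sec\s+\((\d+)X(?:\s+(\w+))?\)"
)


def parse_rate(text: str) -> tuple[float, int, Optional[str]]:
    """Split a rate string into (rate in Gb/s, lane count, speed name)."""
    match = _RATE_PATTERN.match(text)
    if match is None:
        raise ValueError(f"unrecognized rate string: {text!r}")
    rate, width, speed = match.groups()
    return float(rate), int(width), speed


def link_meets_design(text: str, expected_rate_gbps: float,
                      expected_width: int) -> bool:
    """True if the reported link has the designed width and at least the designed rate."""
    rate, width, _ = parse_rate(text)
    return width == expected_width and rate >= expected_rate_gbps


# A link designed for 4X at 100 Gb/s that trained at only one lane.
print(link_meets_design("100 Gb/sec (4X EDR)", 100, 4))
print(link_meets_design("25 Gb/sec (1X EDR)", 100, 4))
```

A link that negotiates fewer lanes or a lower speed than designed is often a sign of a damaged, contaminated, or out-of-spec cable, so a check of this kind is worth running across all nodes after cabling changes.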

FAQ (Frequently Asked Questions)

Why does InfiniBand achieve lower latency than many Ethernet networks?

Because it uses streamlined transport mechanisms and RDMA, reducing protocol overhead and CPU involvement.

How does cabling affect InfiniBand performance at scale?

Consistent signal integrity across all links prevents retransmissions and timing variation that would increase latency.

Are optical InfiniBand cables faster than copper?

They are not inherently faster, but they maintain performance over longer distances without electrical interference.

Can InfiniBand scale without increasing latency?

Largely, yes. Each additional switch tier adds a small per-hop delay, but a properly designed InfiniBand fabric scales efficiently while keeping latency and bandwidth predictable.