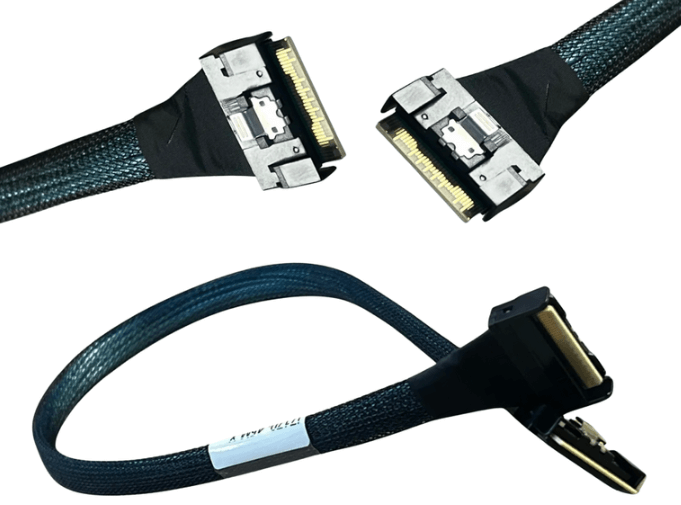

MCIO Becoming The Standard Connector For Modern Server Backplanes

MCIO is becoming the preferred connector in modern server backplanes because it was designed specifically for the electrical, mechanical, and density requirements of PCIe Gen 5 and beyond. As data center architectures shift toward higher lane counts, tighter layouts, and stricter signal integrity margins, older internal connector standards struggle to scale. MCIO addresses these limitations with a purpose-built design optimized for next-generation bandwidth and system density.

PCIe Gen 5 Pushes Existing Connectors To Their Limits

PCIe Gen 5 operates at 32 GT/s per lane, twice the 16 GT/s of Gen 4, which sharply tightens channel loss and noise budgets. At these speeds, connectors can no longer be treated as electrically neutral elements: each transition adds measurable loss, reflections, and crosstalk.

Legacy internal connectors were designed when signaling speeds were much lower. Even compact standards that worked well at PCIe Gen 4 often require short cable lengths and ideal routing to remain stable at Gen 5. MCIO was introduced to meet these higher-frequency requirements without forcing excessive design compromises.
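To make the idea of a loss budget concrete, here is a minimal sketch that sums per-segment insertion loss at the Nyquist frequency and reports the remaining margin. The 36 dB end-to-end figure is a commonly cited Gen 5 target and should be checked against the specification. The segment names and every per-segment loss value are hypothetical, chosen only to illustrate the arithmetic.

```python
from __future__ import annotations

# Sketch of a channel insertion-loss budget check.
# Every numeric value here is an illustrative assumption, not a datasheet figure.


def nyquist_ghz(rate_gt_per_s: float) -> float:
    """For NRZ signaling, the Nyquist frequency is half the transfer rate."""
    return rate_gt_per_s / 2.0


def remaining_margin_db(budget_db: float, segment_losses_db: dict[str, float]) -> float:
    """Return the budget minus the summed segment losses (all in positive dB)."""
    return budget_db - sum(segment_losses_db.values())


GEN5_RATE_GT_S = 32.0
ASSUMED_BUDGET_DB = 36.0  # assumption: commonly cited Gen 5 end-to-end target

# Hypothetical channel: host package, board trace, two connectors, cable, backplane, device.
hypothetical_channel = {
    "host_package": 8.0,
    "motherboard_trace": 10.0,
    "connector_host_side": 1.5,
    "cable": 6.0,
    "connector_backplane_side": 1.5,
    "backplane_trace": 4.0,
    "device": 3.0,
}

if __name__ == "__main__":
    print(f"Nyquist frequency: {nyquist_ghz(GEN5_RATE_GT_S):.0f} GHz")
    margin = remaining_margin_db(ASSUMED_BUDGET_DB, hypothetical_channel)
    print(f"Remaining margin: {margin:.1f} dB")
```

With these made-up numbers, the two connectors consume 3 dB of the budget, so even a small per-connector improvement shows up directly in the remaining margin.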

MCIO is Engineered for Signal Integrity at High Speeds

One of the primary reasons MCIO is gaining adoption is its signal integrity performance. The connector geometry, pin layout, and mating design are optimized to minimize impedance discontinuities and reduce crosstalk between adjacent lanes.

This matters most in dense backplanes where many lanes are routed in parallel. MCIO provides more usable margin at Gen 5 speeds, making it easier for system designers to pass compliance testing and maintain stability across temperature and load variation.
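The effect of an impedance discontinuity can be estimated with the standard reflection coefficient formula, Γ = (Z_load − Z_ref) / (Z_load + Z_ref). The sketch below applies it to a single transition. The 85 ohm reference and the 92 ohm connector value are assumptions for illustration, not measured MCIO figures.

```python
import math


def reflection_coefficient(z_load_ohm: float, z_ref_ohm: float) -> float:
    """Fraction of the incident voltage wave reflected at an impedance step."""
    return (z_load_ohm - z_ref_ohm) / (z_load_ohm + z_ref_ohm)


def return_loss_db(gamma: float) -> float:
    """Return loss in dB; a higher value means less reflected energy."""
    if gamma == 0:
        return math.inf  # perfectly matched: nothing is reflected
    return -20.0 * math.log10(abs(gamma))


if __name__ == "__main__":
    Z_REF = 85.0        # assumed differential reference impedance
    Z_CONNECTOR = 92.0  # hypothetical impedance inside the connector region
    gamma = reflection_coefficient(Z_CONNECTOR, Z_REF)
    print(f"Reflection coefficient: {gamma:.4f}")
    print(f"Return loss: {return_loss_db(gamma):.1f} dB")
```

This models one ideal step only. A real connector is characterized by frequency-dependent S-parameters, so treat the result as a rough intuition aid rather than a compliance estimate.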

Higher Lane Density in a Smaller Footprint

Modern server backplanes must deliver more bandwidth without increasing physical size. MCIO supports multiple lane configurations, including x4, x8, and x16, within a very compact footprint.

This allows architects to aggregate more lanes per connector, reducing total connector count while maintaining or increasing throughput. Fewer connectors also mean fewer transition points, which further improves signal integrity and reliability.
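The trade-off between link width and connector count is simple arithmetic, sketched below. The bandwidth figure is the raw per-direction rate after 128b/130b line encoding and before protocol overhead. The 24-drive backplane is a hypothetical example, not a reference design.

```python
import math

GEN5_GT_PER_S = 32.0             # transfer rate per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def link_bandwidth_gb_per_s(lanes: int) -> float:
    """Raw per-direction bandwidth in GB/s, before protocol overhead."""
    return lanes * GEN5_GT_PER_S * ENCODING_EFFICIENCY / 8


def connectors_needed(total_lanes: int, lanes_per_connector: int) -> int:
    """Number of connectors required to carry the given number of lanes."""
    return math.ceil(total_lanes / lanes_per_connector)


if __name__ == "__main__":
    for width in (4, 8, 16):
        print(f"x{width}: {link_bandwidth_gb_per_s(width):.2f} GB/s per direction")

    # Hypothetical backplane: 24 NVMe drives, each with an x4 link.
    total_lanes = 24 * 4
    for width in (4, 8):
        count = connectors_needed(total_lanes, width)
        print(f"{total_lanes} lanes over x{width} connectors: {count}")
```

In this example, moving from x4 to x8 connectors halves the connector count from 24 to 12 for the same 96 lanes.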

Better Support for Cable Consolidation and Airflow

As systems grow denser, cable count becomes a mechanical and thermal issue. MCIO enables high bandwidth consolidation into fewer cables, which simplifies routing and improves airflow.

Thinner cable assemblies and fewer bundles reduce obstruction in front of fans and heat sinks. This is increasingly important in 1U and 2U systems where thermal headroom is limited and airflow efficiency directly affects performance.

Alignment with Modern PCIe Architectures

Current server designs rely heavily on PCIe switching, NVMe fabrics, accelerators, and composable infrastructure. These architectures favor wide links and short, high-quality channels.

MCIO fits naturally into this model. Its support for wide lane groupings and clean internal routing makes it well suited for switch-based backplanes, GPU interconnects, and NVMe-heavy storage platforms.

Scalability Toward Future Standards

MCIO was developed with forward compatibility in mind. While it is widely deployed for PCIe Gen 4 and Gen 5, its electrical characteristics are intended to scale toward future generations.

This gives platform designers confidence that investing in MCIO-based infrastructure today will not force a full connector redesign in the near term. As PCIe evolves, having margin at the connector level becomes increasingly valuable.

Reduced Design and Validation Risk

Passing compliance testing at Gen 5 is significantly more challenging than at previous generations. Marginal channels lengthen development and raise the risk of late-stage failures.

By using a connector standard designed for these speeds, engineers can reduce the amount of compensatory design work required elsewhere in the channel. This shortens validation cycles and improves first-pass success rates.

Comparison to Older Internal Standards

While SlimSAS and Mini-SAS HD remain useful in many systems, they were not created with Gen 5 as a primary design target.

SlimSAS performs well at Gen 4 and in some Gen 5 scenarios, but may require tighter constraints on cable length and routing. Mini-SAS HD is larger and less suited to dense, high-frequency designs. MCIO addresses both density and speed simultaneously, which is why it is increasingly chosen for new platforms.

Where MCIO Adoption is Accelerating Fastest

MCIO is becoming standard most quickly in:

- PCIe Gen 5 server backplanes
- NVMe and NVMe over Fabrics systems
- AI and accelerator-dense platforms
- PCIe switch-based architectures
- High-density compute and storage nodes

In these environments, the benefits of MCIO directly translate into higher performance and lower integration risk.

When MCIO May Not Be Necessary

MCIO is not required in every system. Platforms operating at lower PCIe generations or with modest bandwidth needs may continue using existing connector standards effectively.

However, for new designs targeting longevity and maximum throughput, MCIO is increasingly the safest and most scalable choice.

FAQ (Frequently Asked Questions)

Is MCIO only for PCIe?

It is primarily used for PCIe, especially Gen 4 and Gen 5, though support for other protocols depends on system design.

Does MCIO replace SlimSAS entirely?

Not immediately. SlimSAS remains common, but MCIO is favored in new high-speed backplane designs.

Is MCIO harder to route or assemble?

No. It is designed to simplify dense routing while improving signal integrity.

Will MCIO be relevant for PCIe Gen 6?

It is designed with future generations in mind, making it more likely to scale than older standards.